Information is Beautiful Award

As you know, I was never too fond of awards — until I won two of them in one night :)

emoto data sculpture

We just finished the documentation for emoto – a data art project visualising the online response to the Olympics London 2012.

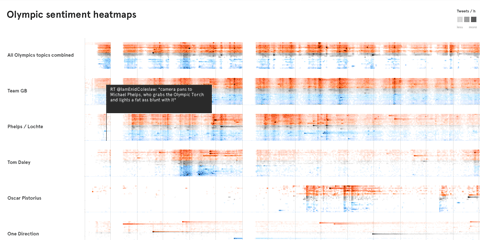

In many ways, the crowning piece of the project, and a conceptual counterpoint to the ephemeral web activities, our data sculpture preserved the more than 12 million tweets we collected in physical form. We had 17 plates CNC-milled — one for each day of the games — with a relief heatmap indicating the emotional highs and lows of each day. Overlay projections highlighted individual stories, and visitors could scroll through the most retweeted tweets per hour for each story using a control knob.

The tweets and topics displayed in the installation can also be investigated in interactive heatmaps. Roll over the rows to see a tooltip displaying the most retweeted tweet on the given topic at the respective point in time.

Thanks so much to my fantastic collaborators at Studio NAND, and Drew Hemment and the team at and around Future Everything and everyone involved!

Plenty-plenty Sentimenti!

Find brief documentation at moritz.stefaner.eu/projects/emoto/

or read more on the project here:

nand.io/visualisation/emoto-installation

nand.io/visualisation/emoto

Article and interview on Creators Project

Data Stories podcast episode #11 with Stephan Thiel on emoto

Images as datastore

In two current projects, we use images as a datastore in parts of our processing pipeline, and that proved quite handy, so I thought I would briefly share the technique with you.

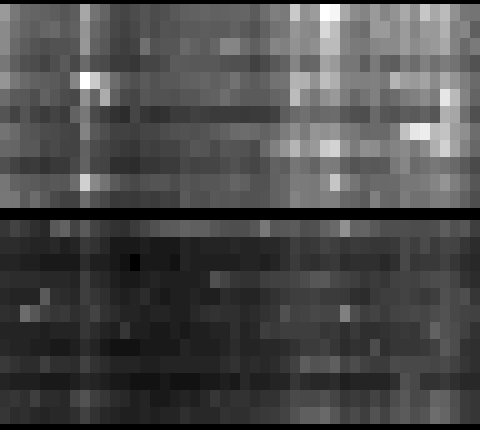

In the emoto project, we use 2D matrices to store how many tweets (brightness) fall in which sentiment category (vertical) over time (horizontal):

This is not exciting per se, but the trick here is that we use this as an elevation map for the 3D models we produce for the data sculpture. So the images are only a “messenger” between two processing steps – data analysis and the 3D modelling tool. Yet, in this form, it is much easier to detect gaps and get an immediate glimpse of the data structure. Also, think about it this way – if your database is an image, you can apply image transformation techniques to modify your data! (Think enhance contrast, minimum/maximum, slicing, blurring, …) Operations that can be very difficult when working only with numbers can be very simple in Photoshop, and, again, the result is immediately inspectable. The catch is that a greyscale image with 8 bits per pixel gives you only 256 steps – but in our case, that was enough.
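To make the 256-step catch a bit more concrete, here is a minimal sketch of how a count matrix could be squeezed into grey levels and written out as an image. The function names, the made-up counts, and the choice of the plain-text PGM format are my assumptions for illustration, not the actual emoto pipeline.

```python
def to_grey_levels(counts):
    """Scale a 2D list of non-negative counts to integers in 0..255."""
    peak = max(max(row) for row in counts)
    if peak == 0:
        return [[0 for _ in row] for row in counts]
    # Everything is quantised to 256 steps here; finer differences are lost.
    return [[round(255 * value / peak) for value in row] for row in counts]


def to_pgm(levels):
    """Serialise grey levels as a plain-text PGM (P2) image."""
    height, width = len(levels), len(levels[0])
    lines = ["P2", f"{width} {height}", "255"]
    lines += [" ".join(str(v) for v in row) for row in levels]
    return "\n".join(lines) + "\n"


# Hypothetical tweet counts: rows = sentiment categories, columns = time steps.
counts = [
    [0, 10, 30],
    [5, 20, 80],
]
levels = to_grey_levels(counts)
pgm_text = to_pgm(levels)  # write this to a .pgm file to inspect it visually
```

Once the data sits in a file like this, any image editor that reads PGM can blur, threshold or crop it, and the next processing step simply reads the pixels back as numbers.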

The second image trick is to use color as an identifier code in a 2D matrix. For instance, you might want to check in which country a certain point on earth is. You do have a list of polygons for each country, but how inefficient, error-prone and tedious it is to loop through all of them and calculate a hit test with each polygon… Also, how do you calculate a hit test with a polygon, anyway?

Now here is an incredibly simple way to do it: Pick a unique color for each country. Go through all the polygons of a country and draw them, filled with that color, on a simple map that maps lat and long to x and y coordinates in the desired precision.

Now, for any point on earth, you just need to look up the color of the pixel belonging to its map coordinate, and – there you have the code of the corresponding country. Very handy! Again, all the difficult data processing has been taken care of by the image processing algorithm.
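Here is a rough sketch of the lookup side of this trick. Everything in it is an assumption for illustration: the one-pixel-per-degree grid, the two invented countries, and especially `fill_box`, which only stands in for the polygon drawing that a real graphics library would do for you.

```python
WIDTH, HEIGHT = 360, 180  # one pixel per degree; an assumed precision


def to_pixel(lat, lon):
    """Map latitude/longitude to (x, y) on a simple equirectangular grid."""
    x = min(int((lon + 180.0) * WIDTH / 360.0), WIDTH - 1)
    y = min(int((90.0 - lat) * HEIGHT / 180.0), HEIGHT - 1)
    return x, y


def fill_box(raster, colour, south, west, north, east):
    """Stand-in for polygon drawing: fill a lat/lon box with one colour."""
    x0, y0 = to_pixel(north, west)
    x1, y1 = to_pixel(south, east)
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            raster[y][x] = colour


# Hypothetical colour codes; black (0, 0, 0) means "no country here".
COLOUR_TO_COUNTRY = {(255, 0, 0): "A-land", (0, 0, 255): "B-land"}

raster = [[(0, 0, 0)] * WIDTH for _ in range(HEIGHT)]
fill_box(raster, (255, 0, 0), south=40, west=-10, north=50, east=5)
fill_box(raster, (0, 0, 255), south=-30, west=20, north=-10, east=40)


def country_at(lat, lon):
    """Look up the country by reading one pixel; no polygon maths needed."""
    x, y = to_pixel(lat, lon)
    return COLOUR_TO_COUNTRY.get(raster[y][x])
```

With this in place, `country_at(45, 0)` reads a single pixel and maps its colour back to a name. Two things to watch for in practice: the drawing must not use anti-aliasing, because blended border pixels would produce colours that belong to no country, and the image resolution sets how precise the answer can be near borders.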

So, next time you have a tricky data transformation issue to solve – maybe image processing can be part of the solution! I am sure there are many more tricks along these lines to discover.

( + Thanks to Stephan and Steffen from Studio NAND for developing these workflows with me!)

emoto

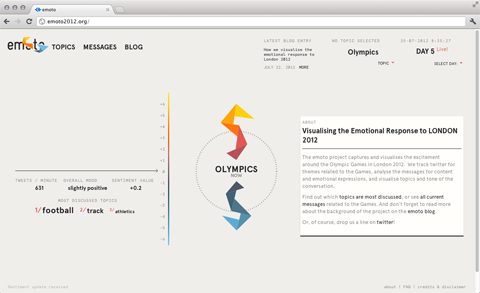

A true mammoth project has finally launched: emoto.

Together with a huge team around Drew Hemment and Studio NAND, and a partnership with MIT Senseable City Lab, we aim at visualising the online response to the Olympic Games for the London 2012 Festival and Cultural Olympiad in the Northwest.

Basically, the idea is to track Twitter messages for content (which topics, disciplines, athletes etc. they refer to) and emotional tone (are they cheering, swearing, being indifferent) and make that info available in real time on http://emoto2012.org, as a supplement or even alternative to traditional ways of consuming the Games coverage.

Our goal is to reveal both the big picture and the little anecdotes that make up the big, big stream of messages.

After the games, we will turn the collected tweets into an actual physical object, to archive these ephemeral little “things flying by” forever.

And during the games, we are posting insights and in-depth analyses (here is a first post on the Opening Ceremony), so there is also a little data journalistic angle to the whole package.

I have to say, this is probably one of the most ambitious projects I have worked on thus far, and despite some small rocks encountered along the way, I am really happy with how it turned out. I hope you like it, too!

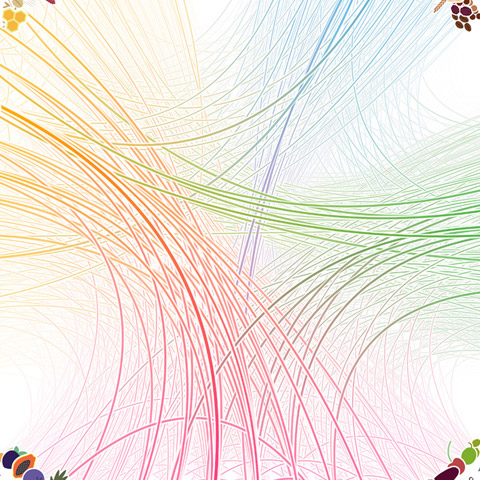

Data Cuisine

I am happy to announce my most out-there infovis-related activity this year: The open data cooking workshop. Organized together with Prozessagenten and Miska Knapek, we will invite 15 participants to explore the data-expressive qualities of food together. Our idea is to cook food with local ingredients that represents local (open) data about the region where the workshop takes place. If you think about it, there are so many ways food can be used to express data: 2d painting/drawing, 3d sculpture, taste dimensions, texture, all the cultural connotations (potato vs. caviar), preparation processes and variables (e.g. automated oven temperature regulation), presentation, … The possibilities are endless!

Much looking forward. Thanks to pixelache and okfn for making this happen!

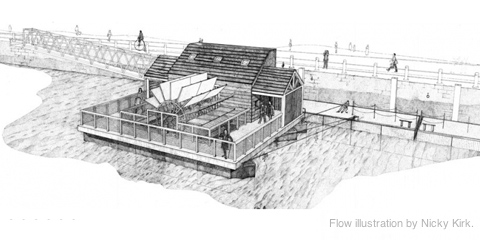

Tyne – simulation as visualization

Together with Stephan Thiel (who did all the heavy lifting) from NAND.io, I am happy to present a small new visualization: Tyne, a visualization of the sensor data generated by ~flowmill, a tide mill floating on the river Tyne, in Newcastle.

Stephan already has a great write-up on the nand.io site, which I recommend reading first, so here are a few comments beyond this project description:

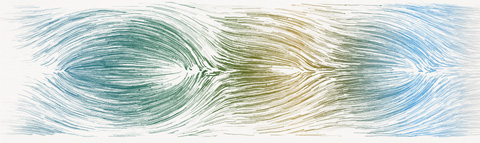

What I find quite interesting about the project is the use of simulation as visualization. Although we used little image thumbnails as icons for each visualization, the actual visualization is in fact a particle simulation which is seeded with the five sensor values measured at a given point in time. Four of these are used as physics parameters – expanding the stream for values greater than the mean, contracting it for values below. The fifth parameter – wheel speed – is directly related to the water speed and thus guides the particle speed. This experiential, process-based, anecdotal, slowly unravelling form of visualization, evoking thoughts of water and wood at the same time, reflects our experience of this highly unusual project after visiting the flowmill ourselves. It became clear that the precise values of the sensors themselves are only side actors in a larger, association-rich and quite unusual system, which we wanted to reflect in our work. Also, the anecdotal nature of the measurements (only every half hour, with quite varying results) called for a treatment of the values beyond a simple line or area chart, so we decided to represent each “data anecdote” in a likewise closed, single anecdotal visualization, representing the situation at a given, but ultimately arbitrary point in time. Also, the imprecision in visual translation did not happen without thought; in fact, an overly precise display of the values would have, ironically, resulted in “lying about the data”, given the imprecise nature of the system generating the values. (Compute that, line chart aficionados.)

Scaling and transforming real-time sensor data in a robust manner is always tricky. To get the data into a form that was manageable and also allowed comparisons across the very different scales of the different variables (with values ranging from small fractions to hundreds), we employed z-score scaling, which centers the data around zero (i.e. “usual” data points lie around zero) and scales it such that, for roughly normally distributed data, around two thirds of the values lie between -1 and 1. This helps both in using the values in a predictable way and in quickly identifying high, low, or average values, without having to learn different scales across variables – in the end, who knows if a salinity value of 238 is high or low for a North English river.
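For the record, z-score scaling just subtracts the mean and divides by the standard deviation. Below is a small sketch with made-up readings. The variable names and values are invented, and I am assuming the population standard deviation here; the sample version would work just as well.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Centre values on their mean and scale by their standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation (an assumption)
    if sigma == 0:
        # A constant series carries no variation; report everything as average.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Made-up sensor readings on very different scales.
readings = {
    "salinity": [230.0, 238.0, 246.0],
    "wheel_speed": [0.2, 0.4, 0.6],
}
scaled = {name: z_scores(values) for name, values in readings.items()}
```

After scaling, both series come out as roughly -1.22, 0 and 1.22, so a salinity of 238 and a wheel speed of 0.4 both read as "perfectly average" without anyone having to know the original units.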

Finally, I want to share one dismissed approach which was hard to control, and aesthetically not exactly the thing we were after, but quite interesting nevertheless:

Based on Kyle McDonald’s code, which in turn was based on Jonathan McCabe’s explorations of multi-scale Turing patterns, we toyed with the idea of trying to force the algorithm to produce blobs of specific sizes by “injecting” black pixels while the algorithm was running. Also, we muted all but the bottom and a few top layers, resulting in a bigger difference between micro- and macro-structure. In the end, the computations turned out too heavy to be run directly in the browser, and the code a wee bit too unpredictable, so we went with a more controllable and visually more fitting approach. Right now, the code is not quite ready for sharing, but I can offer to clean it up and upload it, in case anyone has a strong interest.

Muesli Ingredient Network

I put a small new project online, analyzing which ingredients were ordered together most often in custom muesli mixtures from mymuesli.com customers. Besides chocolate, nuts and oats, the project description also features a little excursion on matrix views of networks, and conditional probabilities.
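In case the conditional probabilities part sounds abstract: the question is how likely it is that an order contains ingredient B, given that it contains ingredient A. A tiny sketch with invented orders follows; the function name and the data are mine, not the project's.

```python
from collections import Counter
from itertools import combinations


def conditional_probabilities(orders):
    """Return {(a, b): P(b in order | a in order)} for co-occurring pairs."""
    single = Counter()
    pair = Counter()
    for order in orders:
        items = sorted(set(order))
        single.update(items)
        pair.update(combinations(items, 2))
    probs = {}
    for (a, b), together in pair.items():
        probs[(a, b)] = together / single[a]
        probs[(b, a)] = together / single[b]
    return probs


# Invented orders, purely for illustration.
orders = [
    {"oats", "chocolate", "hazelnuts"},
    {"oats", "chocolate"},
    {"oats", "raisins"},
    {"chocolate", "hazelnuts"},
]
probs = conditional_probabilities(orders)
```

Note the asymmetry, which is what makes this more interesting than plain co-occurrence counts: in this toy data every hazelnut order also contains chocolate, but only two out of three chocolate orders contain hazelnuts.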

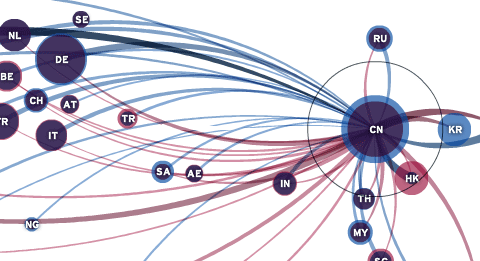

Global Trade Flows

Finally, at least some documentation for the “Global Trade Flows” project I completed last year for Citibank. Unfortunately, I cannot publish the full interactive tool, but I hope the screenshots are informative enough.

Data Stories

I started a podcast series — together with Enrico Bertini — named DATA STORIES. Exciting!

WEF Risk Report 2012

For the third year in a row, I was responsible for a good deal of the graphics in the annual Global Risk Report published by the World Economic Forum. For the report, hundreds of experts take part in a survey on which global risks they consider most important and how these risks depend on each other.

Three types of graphics are at the heart of the report:

The “crystal” network diagram sheds light on the “Centers of Gravity” (systemically most important risks) in each category (Economic, Environmental, Societal, Geopolitical, and Technological Risks) and the risks most strongly connected to these. The network was laid out in d3.js, using a force-directed layout and a “magnetic” grid for regular spacing and to avoid overlaps. The centers of gravity and the 4 most important connectors were fixed manually in this process, to enforce the “crystal” structure. This rough layout was then imported into Illustrator and refined and tweaked by hand. There is also a version which shows a cloud of all risks in the background, but I think this one obscures the conceptual/diagrammatic nature of the original, so personally, I prefer the cleaner version.

The same interconnectivity information can be explored in an “orbit” visualization that plays a bit on the gravity theme established in the survey. Clicking a risk will put it into the center and show how strongly the other risks are connected by how close or far away they are located – a very simple, but quite effective and clean approach to network visualization, by getting rid of the lines altogether and just working with size and distance to express connectivity.
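The core of such an orbit layout fits in a few lines. This is only a sketch of the general idea, not the code behind the report: the radius range, the even spreading of angles and the example strengths are all my assumptions.

```python
import math


def orbit_layout(centre, strengths, min_radius=50.0, max_radius=300.0):
    """Place `centre` at the origin and every other item on an orbit around it.

    `strengths` maps item name to connection strength with the centre (0..1);
    stronger connections get a smaller radius.
    """
    positions = {centre: (0.0, 0.0)}
    names = sorted(strengths)
    for i, name in enumerate(names):
        s = min(max(strengths[name], 0.0), 1.0)  # clamp to 0..1
        radius = max_radius - s * (max_radius - min_radius)
        angle = 2 * math.pi * i / len(names)  # spread items evenly (assumed)
        positions[name] = (radius * math.cos(angle), radius * math.sin(angle))
    return positions


# Hypothetical connection strengths to the clicked risk.
layout = orbit_layout(
    "Fiscal crisis",
    {"Food shortage": 0.2, "Water supply crisis": 0.5, "Unemployment": 0.9},
)
```

Clicking another risk would simply mean calling the function again with that risk as the centre and its own strengths, then animating the items to their new positions.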

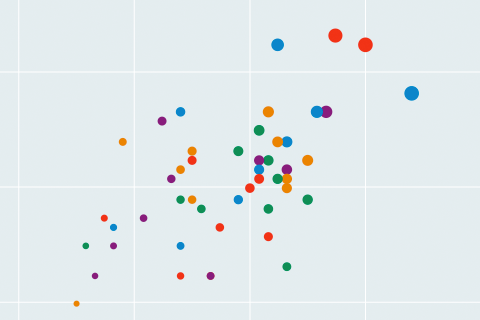

Last, but not least, we have a simple cartesian plot arranging the risks by impact and likelihood. As we gathered some information on the respondents’ region of residence and their stakeholder group, you can explore how, for instance, Asian experts’ perception of economic issues differs from that of the rest of the respondents.

All interactive visualizations were implemented using jQuery, underscore.js, and raphael.js. For the network visualization, I used the force-directed layout from d3.js.

Make sure to check out the full interactive report, or read it in PDF form.